X-Transformer

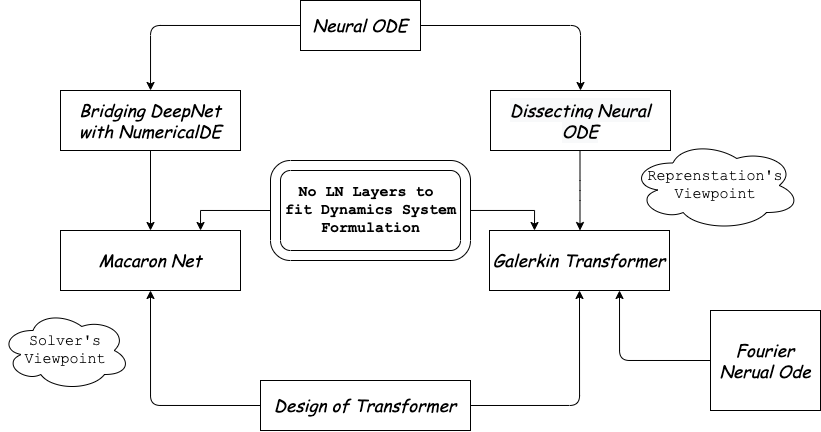

X-Transformer tries to connect the Transformer architecture with neural ODEs, which suggests treating the attention mechanism as an element of a function space. Previous research has shown that error control from numerical methods, Fourier transforms, and Galerkin methods from PDE research can indeed improve the performance of attention blocks. We believe that better architectures can be designed with the help of the existing mathematical literature.
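To make the ODE reading concrete, here is a minimal sketch in Python/NumPy. It assumes single-head softmax attention with the same weights reused at every step, and it omits layer normalization and the feed-forward sublayer. Under those assumptions, a stack of residual attention blocks `x <- x + Attn(x)` is the forward Euler discretization of `dx/dt = Attn(x)` with step size 1. The names `self_attention` and `euler_attention_flow` are hypothetical and used only for illustration; they are not part of X-Transformer.

```python
import numpy as np


def self_attention(x, wq, wk, wv):
    """Single-head softmax self-attention; x has shape (n_tokens, d)."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row is a probability vector
    return weights @ v


def euler_attention_flow(x0, params, t_end=1.0, n_steps=4):
    """Integrate dx/dt = Attn(x) from t=0 to t_end with forward Euler.

    Assumption: the same attention weights are shared by every step, so the
    vector field is autonomous. With t_end == n_steps (step size h = 1), each
    update x + h * Attn(x) is exactly a residual attention block.
    """
    h = t_end / n_steps
    x = x0
    for _ in range(n_steps):
        x = x + h * self_attention(x, *params)
    return x


# Usage: 5 tokens with embedding dimension 8 and random (untrained) weights.
rng = np.random.default_rng(0)
d = 8
params = tuple(rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(3))
x0 = rng.normal(size=(5, d))
x1 = euler_attention_flow(x0, params, t_end=1.0, n_steps=4)
```

Increasing `n_steps` for a fixed `t_end` shrinks the step size, which is one place where step-size and error-control ideas from numerical ODE solvers could enter. A standard Transformer, with distinct weights in each layer, corresponds instead to a time-dependent vector field.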

- Neural ODE - Transformer Programme